Our lab name captures the essence of our research:

If you set your Mind to Motion, you can achieve great things. This refers to our incredible ability to learn new skills, regain function after trauma, and find creative solutions.

The dynamic stimuli in our environment set our Mind to Motion: some trigger thought or action, while others pass by unnoticed.

Our Mind literally brings us to Motion by moving our body, enabling object manipulation and social interaction, the core of our existence.

Moreover, our lab name is a small tribute to Dr. Verbaarschot's mom, M.M., a talented physical therapist who sparked a fascination for movement in her daughter.

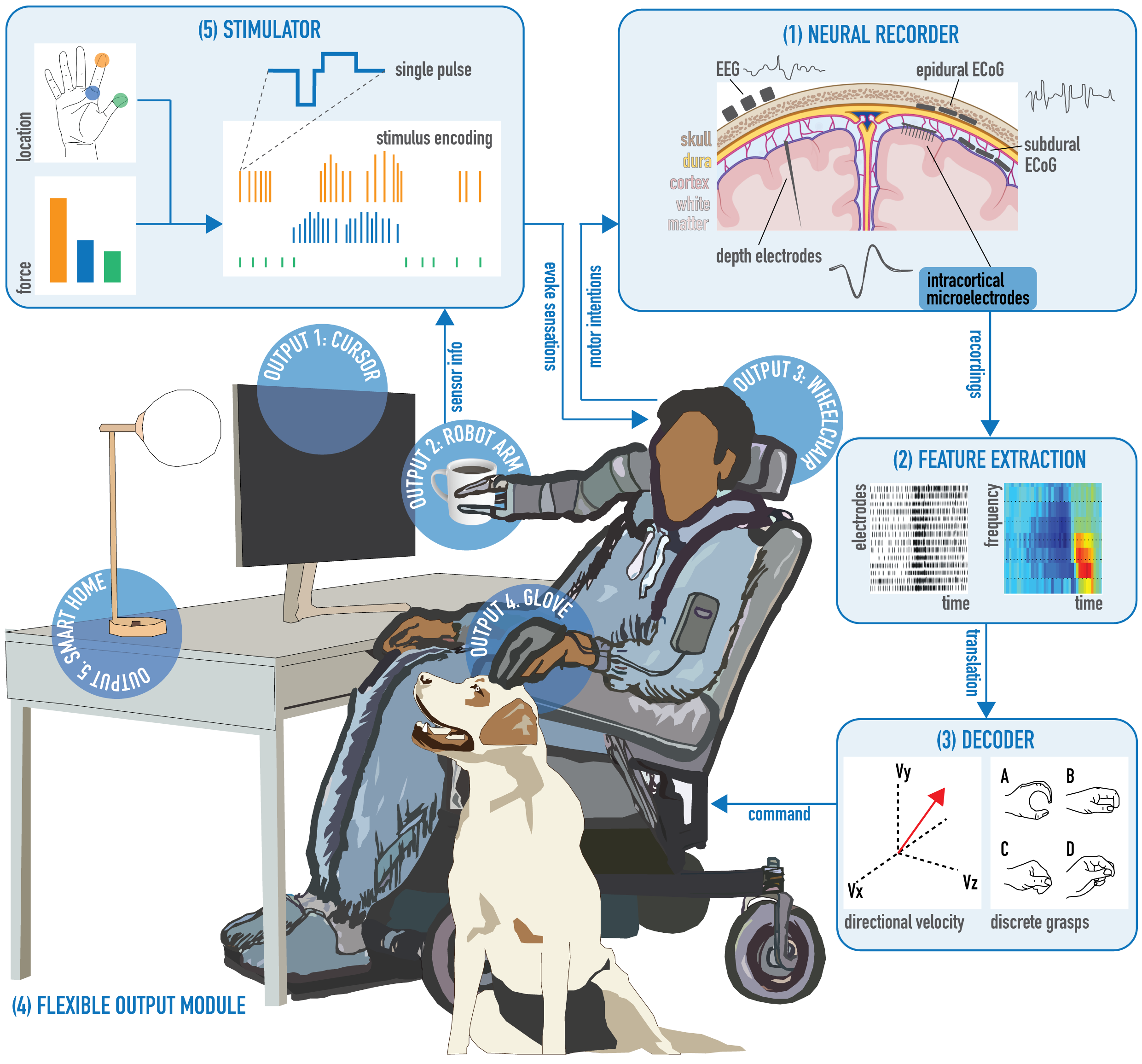

Bidirectional Brain-Machine Interfaces

Brain-Machine Interfaces (BMIs) have achieved impressive functional results, enabling people with severe paralysis to control devices such as a robotic arm with their brain and interact with their direct environment. Moreover, intracortical microstimulation of the brain can evoke localized touch percepts on a person's paralyzed arm, at the location where the robotic arm is being touched. By combining brain decoding and stimulation, one can create a robotic arm that both feels and moves like a person's own arm. Beyond the evident societal and clinical relevance of restoring autonomy and social interaction in individuals with limited sensory and movement capabilities, this also shows how BMIs can be a powerful tool to better understand human cognition. The real-time analysis of ongoing brain activity provides an ideal means to investigate the complex relation between neural processing and conscious experience.

In our lab, we develop bidirectional BMIs that can assist, restore or enhance a person's sensory and movement capabilities. To do so, we make use of implanted electrodes that can both record from and stimulate the brain. Identifying which factors enable a seamless interaction between a user and their device requires a deep understanding of both the device and the human experience of interacting with that device. At UT Southwestern Medical Center, we have the unique opportunity to study the sensorimotor system using a diverse set of neuroimaging methods in people with and without paralysis:

- Temporary stereotactic electroencephalography (sEEG) implants, combined with non-invasive haptic feedback in people undergoing epilepsy treatment, provide a wide view into the neural underpinnings of (multi-sensory) perception, decision making, conflict resolution, and error processing.

- A combination of temporary deep microelectrode implants, superficial electrocorticography (ECoG) implants, and actual touch in people undergoing Deep Brain Stimulation (DBS) treatment for Parkinson's disease and essential tremor provides an in-depth view of somatosensory expectation and perception during motor control.

- Lastly, our own clinical trial makes use of implanted microelectrodes for recording and stimulation to gain a detailed view of the neural pathway from perception and decision making to motor preparation and attempted performance in people with tetraplegia. By looking beyond the primary somatosensory and motor cortex, including information from posterior parietal and dorsolateral prefrontal cortex, we create a multi-level decoder system suitable for intention detection, motor trajectory decoding, error correction, and task-switching.

Each of these participant groups provides one piece of the puzzle. Together, they allow us to study the principles of sensory perception and movement generation in people with intact sensory and motor function, and to translate these principles into high-impact applications for the assistance and restoration of sensation and movement in the upper limb of people with paralysis. We exploit our knowledge of artificial intelligence and cognitive neuroscience to create personalized BMIs. On the one hand, we create an adaptive stimulator that can leverage the neural response to a natural or artificial somatosensory stimulus to optimize its target characteristics without the need for explicit calibration time. On the other hand, we create an adaptive decoder that can learn from the user and with the user as they engage in a novel motor task. Through unsupervised learning strategies and inferred goal states, we strive to create ready-to-go systems with consistently high accuracy. We believe that such self-learning bidirectional systems can provide the desired effortless and meaningful assistance during daily activities, increasing a person’s autonomy and interpersonal connection.

Schematic of a bidirectional BMI. Ongoing neural signals are captured using a (1) neural recorder (brain image created with BioRender.com). A (2) feature extraction process then finds the neural signals of interest. A (3) decoder takes these extracted features and translates them into commands. These commands can be flexibly mapped to (4) various output modules such as an assistive soft-glove, a robotic arm, a wheelchair, a cursor, or smart controls in one’s house (the illustrated person and wheelchair were created by Patrick Verbaarschot). Localized pressure sensors on various devices can be used to translate interaction effects into (5) neural stimulation patterns that evoke context-specific sensory experiences.