Human Error Detection and Prevention

After heart disease and cancer, medical errors are the third leading cause of death in the US. In an era where computer-aided or automated decision making is becoming the industry standard, it is staggering to learn that many quality assurance and error detection processes are still performed manually.

The reason lies in the perceived complexity of the tasks and systems being checked. An expert medical professional can quickly identify difficult-to-quantify errors and make the corresponding corrections. However, not every error is noticed, and not every item is even checked.

As treatment complexity increases, and medical professionals are required to spend additional time assessing more and more voluminous data, the effectiveness of having humans responsible for policing the entire treatment workflow diminishes.

The central question in using AI for human error detection and prevention is whether AI techniques can identify difficult-to-quantify errors as a human expert does. Current industry efforts to automate patient treatment plan checking are largely centered on human-programmed, rule-based checks.

To develop a comprehensive error checking solution, we are exploring more advanced learning methods. Our goal is to develop an AI-based error detection system that can reside in any electronic medical record or treatment management system and automatically detect medical errors.
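To make "human-programmed rule-based checks" concrete, here is a minimal sketch of what such a checker can look like. The `Plan` record, its fields, the rule functions, and the numeric limit are all hypothetical placeholders chosen for illustration; they are not taken from any real treatment planning system and are not clinical guidance.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Placeholder limit for illustration only; not a clinical value.
MAX_DOSE_PER_FRACTION_GY = 20.0


@dataclass
class Plan:
    """Hypothetical, heavily simplified treatment plan record."""
    site: str
    total_dose_gy: float
    fractions: int


def site_missing(plan: Plan) -> bool:
    return not plan.site.strip()


def fraction_count_invalid(plan: Plan) -> bool:
    return plan.fractions <= 0


def dose_per_fraction_too_high(plan: Plan) -> bool:
    # Guard against division by zero; the fraction-count rule reports that case.
    if plan.fractions <= 0:
        return False
    return plan.total_dose_gy / plan.fractions > MAX_DOSE_PER_FRACTION_GY


# Each rule pairs a message with a predicate that returns True when the plan is flagged.
RULES: List[Tuple[str, Callable[[Plan], bool]]] = [
    ("treatment site is missing", site_missing),
    ("fraction count must be positive", fraction_count_invalid),
    ("dose per fraction exceeds limit", dose_per_fraction_too_high),
]


def check_plan(plan: Plan) -> List[str]:
    """Return the messages of all rules that the plan violates."""
    return [message for message, is_violated in RULES if is_violated(plan)]


if __name__ == "__main__":
    print(check_plan(Plan(site="", total_dose_gy=60.0, fractions=0)))
```

A checker of this kind only catches what someone thought to encode as a rule, which is why it struggles with the difficult-to-quantify errors discussed above.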

Publications

- Yang Q, Chao H, Nguyen D, Jiang S. (2019) Mining domain knowledge: Improved framework towards automatically standardizing anatomical structure nomenclature in radiotherapy. (arXiv) (journal)

- Rozario T, Long T, Chen M, Lu W, Jiang S. (2017) Towards automated patient data cleaning using deep learning: A feasibility study on the standardization of organ labeling. (arXiv)

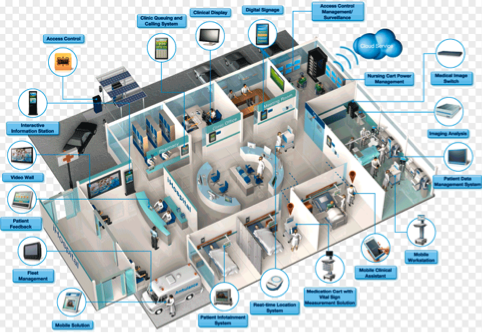

Illustration of smart clinic

Wearable Sensors and Smart Clinic

Recent advances in telecommunications, microelectronics, and sensor manufacturing techniques—especially with miniature circuits, microcontroller functions, front-end amplification, and wireless data transmission—have created substantial possibilities for using wearable technology for biometric monitoring.

These physical, chemical, and biological sensors record health data continuously, enabling powerful analytics to monitor and predict patient condition, activity, location, and more. Furthermore, the passive nature of sensor data collection opens the door to big data in health care and, subsequently, to artificial intelligence (AI) in medicine.

The success of AI applications has hinged on the availability of big data, a feature not commonly found in health care. Wearable sensors will generate big data, providing the opportunity for AI to revolutionize many medical fields, such as assisted care and the management of chronic diseases, and to support the development of the so-called smart clinic.

Currently, we are working on the development of a Real Time Location System (RTLS) that tracks the locations of patients, clinical staff, and assets in real time to greatly improve the clinical workflow and patient safety. The system is constructed with a sensor network based on Bluetooth Low Energy (BLE) technology.

Traditional locating algorithms characterize the node-tag signal attenuation curves and then use trilateration, multilateration, or triangulation to obtain the tag coordinates. We found that the tracking accuracy of these conventional methods did not satisfy the clinical requirements.
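The conventional pipeline can be sketched as follows. This is an illustration under stated assumptions, not the system's actual code: it assumes a log-distance path-loss model for the attenuation curve, and the calibration values (`rssi_at_1m`, `path_loss_exponent`) and function names are hypothetical. The position is obtained by linearizing the circle equations against a reference node and solving the resulting least-squares problem in two dimensions.

```python
import math


def rssi_to_distance(rssi_dbm, rssi_at_1m=-59.0, path_loss_exponent=2.0):
    """Invert the assumed model rssi = rssi_at_1m - 10 * n * log10(d); d in meters."""
    return 10.0 ** ((rssi_at_1m - rssi_dbm) / (10.0 * path_loss_exponent))


def trilaterate(nodes, distances):
    """Least-squares 2D position from node coordinates and estimated distances.

    Subtracting the last node's circle equation from each of the others
    gives linear equations a*x + b*y = c, solved via the normal equations.
    """
    if len(nodes) < 3 or len(nodes) != len(distances):
        raise ValueError("need at least three nodes and one distance per node")
    (xk, yk), dk = nodes[-1], distances[-1]
    s_aa = s_ab = s_bb = s_ac = s_bc = 0.0
    for (xi, yi), di in zip(nodes[:-1], distances[:-1]):
        a = 2.0 * (xk - xi)
        b = 2.0 * (yk - yi)
        c = di**2 - dk**2 - xi**2 - yi**2 + xk**2 + yk**2
        s_aa += a * a
        s_ab += a * b
        s_bb += b * b
        s_ac += a * c
        s_bc += b * c
    det = s_aa * s_bb - s_ab * s_ab
    if abs(det) < 1e-12:
        raise ValueError("nodes are collinear; position is not determined")
    x = (s_ac * s_bb - s_ab * s_bc) / det
    y = (s_aa * s_bc - s_ab * s_ac) / det
    return x, y


if __name__ == "__main__":
    nodes = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
    readings = [-73.0, -77.0, -76.0, -79.0]  # made-up RSSI values in dBm
    print(trilaterate(nodes, [rssi_to_distance(r) for r in readings]))
```

Because distance depends exponentially on RSSI, small signal fluctuations turn into large distance errors, which is one plausible reason this approach can fall short indoors.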

We are now turning to deep learning methods to solve the RTLS problem at a precision previously unobtainable with the established techniques. By using the node signal heat maps across the building as inputs, it is possible to train a deep learning model to identify tag locations.

With this node network as its backbone, the long-term goal of this project is to develop an IoT system that collects and processes large sets of patient data via multi-function tags: an AI-assisted “smart clinic.”
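The data-driven idea is to map a vector of node signal strengths directly to a location instead of going through an explicit attenuation curve. The sketch below is not the deep learning model described here; it substitutes nearest-neighbor matching against stored signal "fingerprints" as the simplest stand-in with the same input and output. The room labels, node count, and RSSI values are invented for illustration.

```python
# Hypothetical fingerprint table: location label -> mean RSSI (dBm) at nodes A, B, C.
FINGERPRINTS = {
    "exam_room_1": (-45.0, -70.0, -80.0),
    "exam_room_2": (-72.0, -48.0, -75.0),
    "waiting_area": (-80.0, -74.0, -50.0),
}


def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length RSSI vectors."""
    if len(u) != len(v):
        raise ValueError("RSSI vectors must have the same length")
    return sum((a - b) ** 2 for a, b in zip(u, v))


def locate(rssi_vector, fingerprints=FINGERPRINTS):
    """Return the label whose stored fingerprint is closest to the reading."""
    return min(
        fingerprints,
        key=lambda label: squared_distance(rssi_vector, fingerprints[label]),
    )


if __name__ == "__main__":
    print(locate((-47.0, -69.0, -82.0)))
```

A trained network plays the role of `locate` but learns the mapping from many labeled readings, so it can absorb effects such as walls and multipath that a fixed lookup table or a single attenuation curve cannot.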

Publications

- Tang G, Westover K, Jiang S. (2021) Contact tracing in healthcare settings during the COVID-19 pandemic using bluetooth low energy and artificial intelligence—a viewpoint. Front. Artif. Intell. (journal)

- Tang G, Yan Y, Shen C, Jia X, Zinn M, Trivedi Z, ... Jiang S. (2020) Balancing robustness and responsiveness in a real-time indoor location system using bluetooth low-energy technology and deep learning to facilitate clinical applications. (arXiv)

- Iqbal Z, Luo D, Henry P, Kazemifar S, Rozario T, Yan Y, ... Jiang S. (2018) Accurate real time localization tracking in a clinical environment using bluetooth low energy and deep learning. PloS One. (journal) (arXiv)

General AI Techniques and Methodologies

We are also interested in gaining insight into AI techniques, investigating AI technologies, and developing and translating AI methodologies for medical applications. We are currently focusing on the following two areas.

Human-like parameter tuning for optimization problems: A wide spectrum of tasks in medicine can be formulated as solving optimization problems. Examples include iterative CT reconstruction and radiotherapy treatment planning. The optimization models for these problems typically contain parameters that govern solution quality, e.g., image quality or plan quality.

Conventionally, these parameters are manually tuned for the best results. Yet the tedious tuning process and the often sub-optimal parameter selection impede clinical utilization of these optimization models. We are exploring reinforcement learning techniques to develop a smart system that can automatically tune parameters with human-level intelligence.
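As a toy illustration of how one parameter governs solution quality, the sketch below denoises a one-dimensional signal by minimizing a data-fidelity term plus a weighted smoothness penalty. The model, the function names, and the choice of plain gradient descent are assumptions made for illustration; real CT reconstruction and treatment planning models are far larger, but the trade-off controlled by `weight` has the same character.

```python
def smooth(signal, weight, iterations=500):
    """Minimize sum((x_i - b_i)^2) + weight * sum((x_{i+1} - x_i)^2) by gradient descent."""
    x = list(signal)
    n = len(x)
    # 2 + 8 * weight bounds the largest curvature of the objective,
    # so this step size keeps gradient descent stable.
    step = 1.0 / (2.0 + 8.0 * weight)
    for _ in range(iterations):
        grad = [2.0 * (x[i] - signal[i]) for i in range(n)]
        for i in range(n - 1):
            diff = x[i + 1] - x[i]
            grad[i] -= 2.0 * weight * diff
            grad[i + 1] += 2.0 * weight * diff
        x = [xi - step * gi for xi, gi in zip(x, grad)]
    return x


def roughness(x):
    """Sum of squared differences between neighboring samples."""
    return sum((x[i + 1] - x[i]) ** 2 for i in range(len(x) - 1))


def fidelity(x, signal):
    """Sum of squared deviations from the measured signal."""
    return sum((a - b) ** 2 for a, b in zip(x, signal))


if __name__ == "__main__":
    noisy = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
    for weight in (0.1, 1.0, 5.0):
        result = smooth(noisy, weight)
        print(weight, roughness(result), fidelity(result, noisy))
```

A small `weight` stays close to the measurements and keeps the noise; a large `weight` gives a smoother result that departs further from the data. Choosing the value that balances the two is the tuning task in question.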

Distributed deep learning to break the data barrier: Behind the success of each deep-learning study lies a tremendous amount of data for model training and validation. Nonetheless, when it comes to deep learning for a medical problem, the amount of data is sometimes limited.

This issue is exacerbated by the difficulty of sharing data among medical institutions because of concerns such as patient privacy. Recently, distributed deep learning has offered a new angle on this problem. With a deep learning algorithm running at each institution on private patient data, and the institutions communicating only non-private algorithm data with one another, it becomes possible to learn collectively and effectively from all the data.
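One common way to realize this idea is federated averaging, sketched below on a deliberately tiny model. This is an assumed example scheme, not necessarily the algorithm used in this project: each institution fits a one-variable linear model on its own data, and only the model weights are exchanged and averaged. All function names and data are hypothetical.

```python
def local_update(weights, data, learning_rate=0.05, epochs=20):
    """Run gradient descent on one institution's private (x, y) pairs."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return (w, b)


def federated_average(updates, sizes):
    """Average the local weights, weighting each site by its sample count."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


def train(institutions, rounds=50):
    """Alternate local training and weight averaging; raw data never leaves a site."""
    weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(weights, data) for data in institutions]
        weights = federated_average(updates, [len(d) for d in institutions])
    return weights


if __name__ == "__main__":
    site_a = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]  # made-up data following y = 2x + 1
    site_b = [(3.0, 7.0), (4.0, 9.0)]
    print(train([site_a, site_b]))
```

Only the pair `(w, b)` crosses institutional boundaries in each round. In practice, shared weights can still leak information about the training data, so real deployments usually add further protections.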

In this project, we are developing the infrastructure and distributed deep-learning algorithms to enable sharing knowledge among different institutions without sharing data. This will facilitate a number of deep learning methods for medical applications.

Publications

- Peng H, Moore C, Zhang Y, Saha D, Jiang S, Timmerman R. (2023) An AI-based approach for modeling the synergy between radiotherapy and immunotherapy. Res Square. (journal)

- Kwon YS, Dohopolski M, Morgan H, Garant A, Sher D, Rahimi A, Sanford N, Vo D, Albuquerque K, Kumar K, Timmerman R, Jiang S. (2023) Artificial intelligence–empowered radiation oncology residency education. Pract Rad Onc. (journal)